Gated Recurrent Unit Example

Refer to riscv_nnexamples_gru.c

- Description:

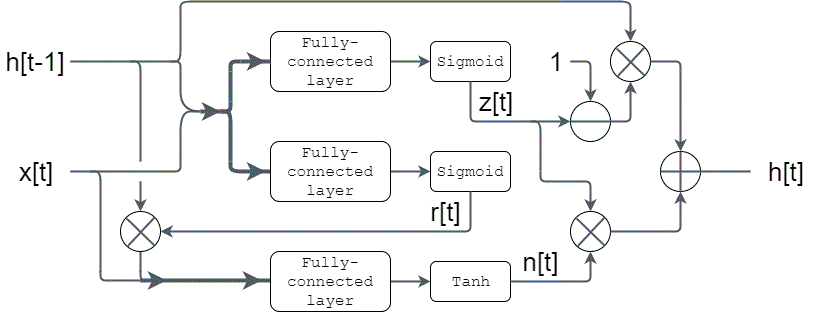

Demonstrates a gated recurrent unit (GRU) built from fully-connected layers and Tanh/Sigmoid activation functions.

- Model definition:

GRU is a type of recurrent neural network (RNN). It contains two sigmoid gates and one hidden state.

The computation can be summarized as:
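A standard GRU formulation that is consistent with the weight names used in this section is sketched here. The symbols x_t (input), h_{t-1} (history), and b_z, b_r, b_n (biases) are assumptions introduced for illustration, as is the order of the terms inside each concatenation, which is inferred from the buffer layout described in this section rather than taken from the source file.

$$
\begin{aligned}
z_t &= \sigma\left(W_z\,[x_t,\ h_{t-1}] + b_z\right) \\
r_t &= \sigma\left(W_r\,[x_t,\ h_{t-1}] + b_r\right) \\
n_t &= \tanh\left(W_n\,[r_t \odot h_{t-1},\ x_t] + b_n\right) \\
h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot n_t
\end{aligned}
$$

Here z_t is the update gate, r_t is the reset gate, n_t is the candidate hidden state, and ⊙ denotes element-wise multiplication.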

- Variables Description:

update_gate_weights, reset_gate_weights, hidden_state_weights are the weights corresponding to the update gate (W_z), reset gate (W_r), and hidden state (W_n).

update_gate_bias, reset_gate_bias, hidden_state_bias are the layer bias arrays.

test_input1, test_input2, test_history are the inputs and the initial history.

The buffer is allocated as:

| reset | input | history | update | hidden_state |

In this way, the concatenation is done automatically, since (reset, input) and (input, history) are physically adjacent in memory.

The ordering of the weight matrix should be adjusted accordingly.
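The layout above can be sketched as pointer arithmetic over one contiguous scratch allocation. This is a minimal illustration only: the type `gru_scratch_view` and the helpers `gru_scratch_len` and `gru_scratch_split` are hypothetical names, and the sketch assumes that every region except input holds `history_size` 16-bit elements.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical view onto a scratch buffer laid out as
 * | reset | input | history | update | hidden_state |
 * Assumption: every region except input holds history_size elements. */
typedef struct {
    int16_t *reset;
    int16_t *input;
    int16_t *history;
    int16_t *update;
    int16_t *hidden_state;
} gru_scratch_view;

/* Total number of 16-bit elements the scratch buffer must hold. */
static size_t gru_scratch_len(size_t input_size, size_t history_size)
{
    return 4 * history_size + input_size;
}

/* Carve the five regions out of one contiguous allocation. */
static gru_scratch_view gru_scratch_split(int16_t *scratch,
                                          size_t input_size,
                                          size_t history_size)
{
    gru_scratch_view v;
    v.reset        = scratch;
    v.input        = v.reset + history_size;
    v.history      = v.input + input_size;
    v.update       = v.history + history_size;
    v.hidden_state = v.update + history_size;
    return v;
}
```

With this split, `v.input` addresses the vector (input, history) of length `input_size + history_size`, and `v.reset` addresses (reset, input) of the same length, so either pointer can be handed to a fully-connected layer without copying.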

- NMSIS NN Software Library Functions Used:

riscv_fully_connected_mat_q7_vec_q15_opt()

riscv_nn_activations_direct_q15()

riscv_mult_q15()

riscv_offset_q15()

riscv_sub_q15()

riscv_copy_q15()
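One plausible way the element-wise functions combine for the final blend h = z·n + (1 − z)·h is sketched below in plain C. How the example actually sequences these calls is an assumption here, not something this page confirms. The helpers `mult_q15`, `offset_q15`, `sub_q15`, and `gru_blend_q15` are hypothetical stand-ins written for illustration; they are not the NMSIS implementations. The idea is that adding the Q15 value −1.0 to z gives z − 1, so the final addition becomes a subtraction: z·n − (z − 1)·h.

```c
#include <stdint.h>

/* Saturate a 32-bit intermediate to the Q15 range. */
static int16_t sat_q15(int32_t x)
{
    if (x > INT16_MAX) return INT16_MAX;
    if (x < INT16_MIN) return INT16_MIN;
    return (int16_t)x;
}

/* Hypothetical stand-in: element-wise Q15 multiply.
 * Assumes >> on a negative value is an arithmetic shift. */
static void mult_q15(const int16_t *a, const int16_t *b, int16_t *dst, uint32_t n)
{
    for (uint32_t i = 0; i < n; i++)
        dst[i] = sat_q15(((int32_t)a[i] * b[i]) >> 15);
}

/* Hypothetical stand-in: add a constant to every element, saturating. */
static void offset_q15(const int16_t *src, int16_t offset, int16_t *dst, uint32_t n)
{
    for (uint32_t i = 0; i < n; i++)
        dst[i] = sat_q15((int32_t)src[i] + offset);
}

/* Hypothetical stand-in: element-wise saturating subtract. */
static void sub_q15(const int16_t *a, const int16_t *b, int16_t *dst, uint32_t n)
{
    for (uint32_t i = 0; i < n; i++)
        dst[i] = sat_q15((int32_t)a[i] - b[i]);
}

/* Blend step: history <- z*n - (z-1)*history.
 * update holds z (sigmoid output), hidden_state holds n (tanh output).
 * Both are overwritten as scratch space. */
static void gru_blend_q15(int16_t *history, int16_t *update,
                          int16_t *hidden_state, uint32_t n)
{
    mult_q15(update, hidden_state, hidden_state, n); /* hidden_state = z * n     */
    offset_q15(update, INT16_MIN, update, n);        /* update = z - 1 (-1.0 Q15) */
    mult_q15(history, update, update, n);            /* update = h * (z - 1)     */
    sub_q15(hidden_state, update, history, n);       /* history = z*n - h*(z-1)  */
}
```

Working in place like this keeps the whole step inside the existing scratch regions; the cost is that the update and hidden_state regions no longer hold z and n afterwards.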